This is part two in a series on A/B testing and how to get it right.

So far, I've covered the fact that, in practice, most A/B tests don't yield results, largely because people lack a solid understanding of how to run them properly, and that getting wins is not an easy task. I also covered how to make sure you’re getting accurate results by gaining a basic understanding of statistics and the technicalities behind A/B testing.

In sum, if you read part one, you know that if your client doesn't have the necessary traffic levels, A/B testing is not going to work for now.

But if your client has sufficient traffic, keep reading. In this article, I'll explain:

- The types of online experiments and levels of sophistication.

- The right approach to A/B testing and how to set the right expectations.

- How to ensure your test ideas have high potential for being a win.

Read on!

The types of online experiments and levels of sophistication

A/B testing is technically called A/B/n testing (“n” refers to the number of variations being tested), but there are other types of controlled online experiments you can run on your client's site, such as multivariate testing, multipage testing, and bandit testing.

In addition, there are two main A/B/n testing strategies:

- Iterative (sometimes called incremental testing)

- Innovative (sometimes called radical testing)

Every type and strategy of experimentation has its place and purpose. But in part one, I mentioned that in order to deliver noticeable results faster (and reduce the number of inconclusive outcomes), your best chance is probably testing bigger changes (innovative A/B testing + multipage testing) on key pages of your client’s store (homepage, collections page, product page, and cart), as well as site-wide changes. Visitors are more likely to notice bigger changes and have their decision-making process affected by them, so you're more likely to see a significantly different outcome.

Here, I’ll go a bit deeper into why, in most cases, the choice of testing strategy is actually simple, and how it still depends on your client's circumstances and culture.

To put it simply, if your clients are at the beginner level when it comes to experimentation, and many Shopify stores are, then my suggestion above is right for you.

For three simple reasons:

1. There is a limit to how scientific and sophisticated merchants can be with their experiments, based on their traffic volume and monthly purchases (even if these are sufficient for A/B testing). You need huge volumes of data (traffic and monthly purchases) to be truly rigorous (think enterprise-level ecommerce).

With more data and volume, you also become more sensitive to any change in your online performance (at a certain point, a small drop in conversion rate could mean hefty monetary losses, for example), which in turn should make you more interested in sophistication and data science.

2. Your clients are likely not that interested in sophistication and definitive answers (unless it's part of their culture). They’re more likely interested in results and how much more profit those results yield (this could be the case even if they have huge volumes of data).

3. Fast, noticeable results are what get you (and your client) to the next level of online experimentation (and keep you from getting fired). Wins build momentum and trust in the process.

Therefore, unless your client has more than 100,000 unique monthly visitors (and 1,000 monthly transactions), other forms of online experiments and strategies are not really relevant at this point.
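To see why traffic puts a ceiling on what you can test, here is a rough sketch of the standard normal-approximation sample-size formula for comparing two conversion rates at 95 percent confidence and 80 percent power. The 3 percent baseline conversion rate and the 10 percent relative minimum detectable effect are hypothetical inputs, not benchmarks, and testing tools may use different methods, so treat the output as a ballpark.

```python
import math
from statistics import NormalDist

def visitors_per_variant(baseline_cr, relative_mde, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant for a two-sided,
    two-proportion test (normal approximation, no continuity correction)."""
    p1 = baseline_cr
    p2 = baseline_cr * (1 + relative_mde)  # conversion rate we hope to detect
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # ~1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # ~0.84 for 80% power
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)

# Hypothetical store: 3% conversion rate, hoping to detect a 10% relative lift
n = visitors_per_variant(baseline_cr=0.03, relative_mde=0.10)
print(f"~{n:,} visitors per variant, ~{2 * n:,} in total")
```

With these inputs the sketch asks for roughly 53,000 visitors per variant, over 100,000 in total for a simple A/B test. Because the required sample grows with the inverse square of the difference you want to detect, smaller lifts or lower baseline rates push the number up quickly.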

And even if they do have the necessary numbers, the incentive for both of you should be to deliver noticeable results fast. This can be achieved more effectively with innovative A/B/n testing and multipage A/B/n testing, using as few variations as possible (ideally just two: A being the original, and B your variation). For the sake of simplicity, I'll refer to both simply as A/B testing.

If the above is not the case (and there are always exceptions), here are some good resources on other forms of experiments and strategies:

- When To Do Multivariate Tests Instead of A/B/n Tests

- Bandit testing: The Beginner's Guide to A/B Testing During Black Friday Cyber Monday

- Iterative Testing vs. Innovative Testing: Which Works Best?

Let's look at how to make A/B testing work for you and your clients.

The right approach to A/B testing

It's important to cover the approach and mindset behind A/B testing: knowing when to use it and for what reasons, and, more importantly, what to expect from it. Otherwise, disappointment is inevitable.

Always measure money

You reduced bounce rate? You increased “Add to cart” clicks? Great, but how do these changes affect your client’s revenue? There isn't always a clear correlation: lower bounce rates don't necessarily lead to more revenue, and even higher conversion rates don’t always lead to more revenue (e.g., there can be a slight drop in conversions but a bigger increase in AOV, which leads to more revenue).

Don't get me wrong, these are important metrics and should be tracked (they can give you important insights), but for an ecommerce store they should always be secondary to:

- Revenue per visitor (always comes first)

- AOV (average order value)

- Ecommerce conversion rate

But even more importantly, never forget that revenue does not equal profit, so pay attention to your client’s profit margins, especially if your client is a reseller, since resellers typically have lower margins.
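To put numbers on these points, here's a minimal sketch comparing two hypothetical variants. Revenue per visitor equals conversion rate times AOV, and a simplified gross profit per visitor multiplies that by a blended margin. The traffic, orders, revenue, and margins are all invented for illustration, and real profit also depends on costs this sketch ignores.

```python
from dataclasses import dataclass

@dataclass
class VariantResult:
    """Hypothetical per-variant totals from a finished A/B test."""
    visitors: int
    orders: int
    revenue: float       # total revenue in dollars
    gross_margin: float  # assumed blended margin, e.g. 0.40 = 40%

    @property
    def conversion_rate(self) -> float:
        return self.orders / self.visitors

    @property
    def aov(self) -> float:
        return self.revenue / self.orders

    @property
    def revenue_per_visitor(self) -> float:
        # Same as conversion_rate * aov
        return self.revenue / self.visitors

    @property
    def profit_per_visitor(self) -> float:
        # Simplified gross profit: ignores shipping, fees, and fixed costs
        return self.revenue_per_visitor * self.gross_margin

a = VariantResult(visitors=10_000, orders=300, revenue=24_000.0, gross_margin=0.40)
b = VariantResult(visitors=10_000, orders=280, revenue=25_200.0, gross_margin=0.35)

for name, v in (("A", a), ("B", b)):
    print(
        f"{name}: CR {v.conversion_rate:.2%}, AOV ${v.aov:.2f}, "
        f"RPV ${v.revenue_per_visitor:.2f}, profit/visitor ${v.profit_per_visitor:.2f}"
    )
```

In this made-up example, variant B converts slightly worse (2.8 vs. 3 percent) but earns more revenue per visitor ($2.52 vs. $2.40) thanks to a higher AOV. If that extra revenue comes from lower-margin products, B still loses on profit per visitor (about $0.88 vs. $0.96).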

A/B testing is a long-term strategy

If you’re planning only a few A/B tests (one or two), there's a high chance you won't be able to achieve the outcomes you want (or have promised to your client) for several important reasons:

- It's hard to get wins on your first tests: this is especially true if you've never done this before (unless you're lucky). As I covered in part one, test outcomes are hard to predict; even seasoned experts are often proven wrong, and you will miss often on your first attempts.

- You might miss the win even if there is one: remember statistical power? A power of 80 percent means that if there is a real difference between the two variants (at least as large as the minimum effect you planned for), the test will pick up on that difference eight times out of ten. In other words, two times out of ten the test will fail to recognize the difference between the two variations, even though a difference exists.

- Even if you get a win, it might not materialize in real life: this is something you rarely find in A/B testing case studies or blog posts, but the outcome of a test is really just a prediction, not a 100 percent guarantee.
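To make the 80 percent figure concrete, the sketch below simulates many A/B tests in which the variant really does convert better (10 percent vs. 12 percent, hypothetical rates), with roughly 3,840 visitors per variant, about what the standard sample-size formula gives for 80 percent power at these rates. Each simulated test is judged with a two-sided, pooled two-proportion z-test at the 5 percent significance level; real testing tools may use other statistics.

```python
import math
import random
from statistics import NormalDist

def two_sided_p_value(conv_a, n_a, conv_b, n_b):
    """Pooled two-proportion z-test, two-sided p-value."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def simulate_power(p_a, p_b, n, sims=1000, alpha=0.05, seed=42):
    """Share of simulated tests that flag a difference when one truly exists."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    detected = 0
    for _ in range(sims):
        # Each visitor converts independently at the variant's true rate
        conv_a = sum(rng.random() < p_a for _ in range(n))
        conv_b = sum(rng.random() < p_b for _ in range(n))
        if two_sided_p_value(conv_a, n, conv_b, n) < alpha:
            detected += 1
    return detected / sims

# Hypothetical: true rates 10% vs. 12%, ~3,840 visitors per variant
power = simulate_power(p_a=0.10, p_b=0.12, n=3840)
print(f"Tests that caught the real lift: {power:.0%}")
```

Expect a result close to 80 percent: roughly one in five of these tests misses a lift that is genuinely there.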

Overall, measuring the exact monetary value of an A/B test is not an easy task.

Remember the margin of error? It means that, over the long run, your 20 percent lift could turn out to be just a 5 percent lift.
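As a rough illustration of that margin of error, this sketch computes a 95 percent confidence interval for the relative lift using the simple Wald (normal-approximation) interval for a difference in proportions. The counts (400 vs. 480 orders out of 4,000 visitors each) are hypothetical, and dividing by the control rate treats it as exact, which is a simplification; testing tools may compute their intervals differently.

```python
import math
from statistics import NormalDist

def relative_lift_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Wald interval for the difference in conversion rates, expressed
    relative to the control rate (which is treated as exact)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # ~1.96 for 95%
    diff = p_b - p_a
    return (diff - z * se) / p_a, diff / p_a, (diff + z * se) / p_a

# Hypothetical test: 400 vs. 480 orders from 4,000 visitors per variant
low, observed, high = relative_lift_interval(400, 4000, 480, 4000)
print(f"Observed lift {observed:.0%}, 95% interval {low:.0%} to {high:.0%}")
```

The observed lift is 20 percent, but the interval runs from roughly 6 to 34 percent, so a much smaller long-run lift is entirely consistent with the same data.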

And you might still get an occasional imaginary win (false positive), even if you followed all the critical A/B testing practices (not stopping too early, sufficient sample size, etc.).

Plus, there might be other factors you didn't account for, such as sudden shifts in the client's market, or the client making a change to their site without your knowledge that eats up your gains (humans are not the greatest planners).

Therefore, by implementing a winning variation, you are essentially making a bet (albeit a far more accurate bet than a simple guess or assumption).

Hence, it's very important to educate your clients on this; otherwise, they might develop unrealistic expectations and blame you when reality doesn't match them.

Your best bet is a cycle of tests

The goal is to go through a cycle of tests, find winners, stack them upon each other, and thus significantly improve your client's online profitability (with higher conversion rate, AOV and revenue per visitor), and then repeat.

In other words, you'll make more high-probability bets and win in the long run: with more tests, you'll likely increase the number of wins, which will outweigh the number of false wins.
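Here is a back-of-the-envelope sketch of why real wins outweigh false ones over a cycle of tests. It assumes a hypothetical share of test ideas that genuinely work, that each real effect is large enough for 80 percent power to apply, and a two-sided test at the 5 percent level, where only about half of the false alarms (2.5 percent) favor the variant and look like a win.

```python
def expected_wins(n_tests, share_real, power=0.80, alpha=0.05):
    """Expected real and false wins across a program of A/B tests.

    share_real: assumed fraction of test ideas with a genuine positive effect.
    """
    true_wins = n_tests * share_real * power
    # With a two-sided test, about half of the alpha false alarms favor the variant
    false_wins = n_tests * (1 - share_real) * alpha / 2
    return true_wins, false_wins

for share_real in (0.10, 0.20, 0.40):  # hypothetical idea quality
    true_wins, false_wins = expected_wins(20, share_real)
    print(f"{share_real:.0%} good ideas: {true_wins:.1f} real wins vs {false_wins:.2f} false wins")
```

With 20 tests and 20 percent genuinely good ideas, that's about 3.2 real wins against 0.4 false ones. Each additional test adds far more expected real wins than false ones, and better research (a larger share of good ideas) widens the gap further.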

You don't get sustainable results from just a few tries (unless you're lucky, or appear to be).

When you run more than one test, you can also put your trust and confidence in the testing program/plan, not just the individual outcomes of each test, which, as you know by now, are way too unpredictable.

Furthermore, with each test you get a better understanding of your client's business and customers, which increases your chances of getting more wins (if done right, though those chances are still limited).

Not to mention that with each test, you will get better at it (just like with everything in life, theory without real-life practice is nothing).
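Stacking winners, as described above, compounds rather than adds up. A minimal sketch, assuming three hypothetical winning tests that lifted revenue per visitor by 5, 3, and 8 percent, and assuming each lift holds up after implementation and doesn't interact with the others (neither of which is guaranteed, as covered earlier):

```python
from math import prod

def stacked_lift(lifts):
    """Combined relative lift from applying several lifts one after another."""
    return prod(1 + lift for lift in lifts) - 1

combined = stacked_lift([0.05, 0.03, 0.08])  # hypothetical RPV lifts
baseline_rpv = 2.40                            # hypothetical revenue per visitor ($)
print(f"Combined lift: {combined:.1%}")        # Combined lift: 16.8%
print(f"RPV: ${baseline_rpv:.2f} -> ${baseline_rpv * (1 + combined):.2f}")
```

Under these assumptions, the combined lift comes to about 16.8 percent, slightly more than the 16 percent you'd get by simply adding the three together.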

How to ensure your test ideas have high potential for being a win

Just blindly running as many A/B tests as possible (testing random ideas) will not get you very far.

What people typically do is copy other case studies, test best practices (firmly believing that they know what works best), or just test whatever they can think of.

This is not a very efficient strategy because you're just testing your guesses, essentially shooting in the dark and hoping to hit something. You will miss often and your win rate will be low. Your enthusiasm will wind down and frustration will kick in.

With this approach, you may hit something once in a while. By some estimates, only about one in seven tests (at best) produces a positive result this way. I doubt your client has the patience and time for that.

There is a better way...

A/B testing is just one tool in a much broader optimization process

Testing usually comes into play when you need to measure the impact of a design change on CRO (conversion rate optimization), but it's also used in UX/UI, product management, and digital marketing in general (to put it simply, whenever you want to measure the changes you made and make sure the result is actually better than before).

A proper process puts a lot of research and thinking into every test idea.

You are not guessing anymore; you use data to back your decisions about improvements. Top teams have their own processes, which are adapted to their unique backgrounds and capabilities, but the building block of all these processes is the scientific method. In short, it looks like this:

- Do research (quantitative and qualitative) to find out where and why your client's store is underperforming.

- Develop hypotheses (improvement ideas) based on the data from the research.

- Run tests to validate these hypotheses (create your first cycle of tests).

- Rinse and repeat.

Teams that use this process have a far higher win rate, starting at 30 percent and going all the way up to 90 percent for more experienced teams.

Some great guides that will get you started in the right direction:

- How to Conduct Research That Drives A/B Testing

- A/B Testing Mastery: From Beginner To Pro in a Blog Post

- Research-driven CRO guide

This is probably not what you wanted to hear, but at the very beginning of this series, I stated that successful A/B testing is not easy and requires a lot of effort and time.

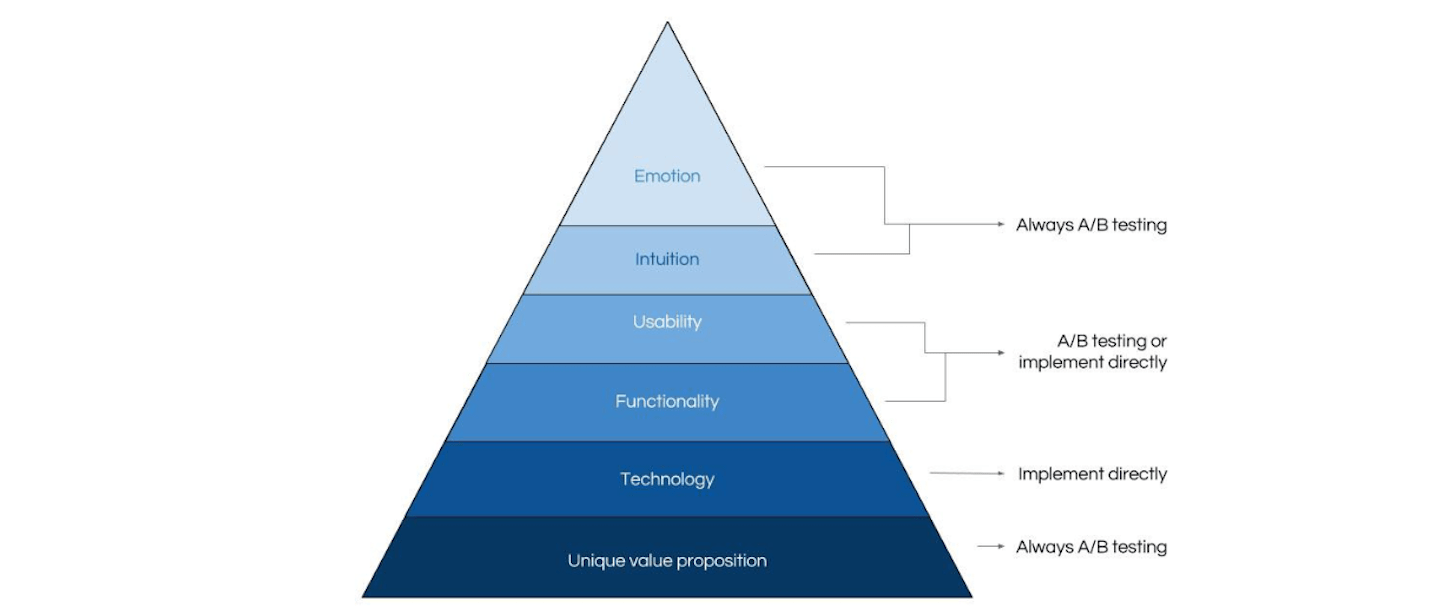

Not everything needs to be (or can be) tested

The beauty of the optimization process is that it's not all about testing; testing is in fact just the last part of the process.

During the research, you'll often uncover obvious improvements that don't need any testing at all.

Therefore, just because you can't A/B test doesn't mean you can't optimize your client's store.

And just because you can A/B test doesn't always mean you have to. If the risk is minimal and the potential for impact is high (based on the insights from research), it might be worth skipping the test and making the bet right away.

Final words

There isn't a one-size-fits-all solution here, and there are nuances depending on your and your client's unique circumstances (and your arrangement).

But the key thing to remember here is this…

If you’ve ever followed top performers in any field and wondered what sets them apart from the rest, you'll notice that they put a lot more emphasis on processes and systems, continuously improving and getting better, rather than focusing on single outcomes and shiny tactics.

A/B testing is no different.

Will you be running A/B tests for any of your clients? Let us know in the comments below!